React Speech Recognition

PocketSphinx Python is required if and only if you want to use the Sphinx recognizer (recognizer_instance.recognize_sphinx). Available On: Windows. “Your good work is commendable, and frankly, I am a bit envious of your talents.” Caution: The default key provided by SpeechRecognition is for testing purposes only, and Google may revoke it at any time. In our solution, this allows boost. Already, speech-to-text APIs like AssemblyAI are making ASR technology more affordable, accessible, and accurate. For an overview of how these components of Web development and interaction work together, see. It even outperforms models that were trained on non-publicly available datasets containing up to 21 times more data. The option is enabled by default so the selection indicator is shown. This is the same code that we used in our React component earlier. Speech recognition is of enormous help here. Error property contains the actual error returned. In the future when you run WSR, you can bypass the Control Panel and launch the app directly. Like fingerprints, individuals have unique markers in their voices that technology can use to identify them. Google Speech Recognition. It is generally used in multilingual contexts or support centers where the system must understand different languages. I actually used Dragon Anywhere for some time, because I wanted to take my dictation out on the go, and at the time I had a Mac and could not afford Dragon Professional Individual to transcribe audio files. If it is too sensitive, the microphone may be picking up a lot of ambient noise.

Speech emotion recognition: two decades in a nutshell, benchmarks, and ongoing trends

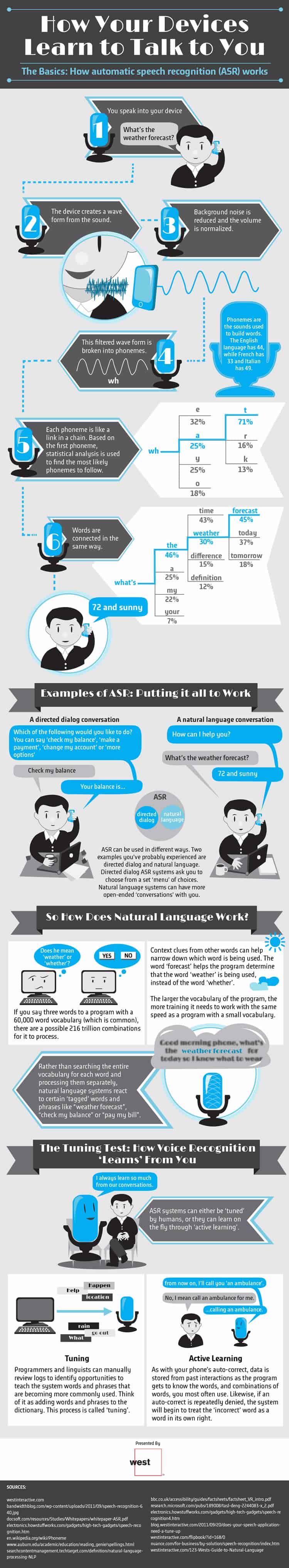

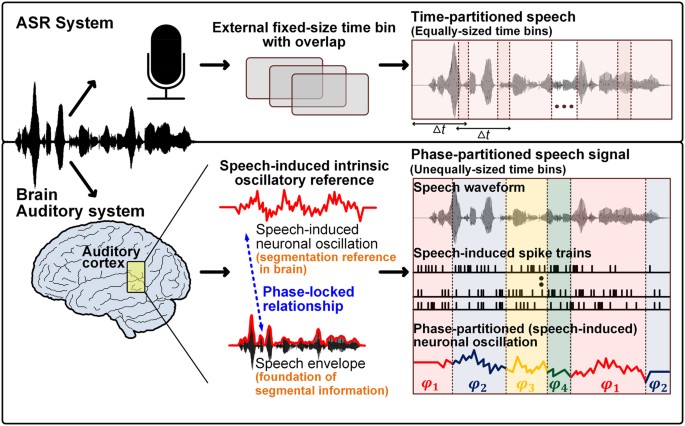

One of the major shifts that occur in 5G is cloudification of the core network elements through separating the network control functions from the data forwarding planes. The field of automatic speech recognition ASR is rapidly evolving, and there are several exciting developments on the horizon. These tools are invaluable for a variety of applications, such as speech recognition, audio processing, and music analysis. That requires speech recognition technology. Download PowerDirector 365 for free by clicking the link below if you want an easy way to create. Finally, a punctuation and capitalization model is used to improve the text quality for better readability. Users can invoke an Alexa skill with the invocation name for a custom skill to ask a question or with a name free interaction. It is fraught with political and interpersonal dynamics to approach someone even privately one on one today and gently suggest their career would get a huge boost if they hired a voice coach to help improve their verbal communication delivery. In general, content editors should avoid using images as links. Here is also a link to our Github. Connect with Matthew on Twitter and Google +. These are resampled at 16kHz and saved in the “resampled” folder. IBM has pioneered the development of Speech Recognition tools and services that enable organizations to automate their complex business processes while gaining essential business insights. For our testing, which is performed on English speech data, we use Whisper’s medium. Once the tool has been activated and the microphone enabled, you can begin dictating text. In the same way, you can turn it off by saying Stop listening or clicking the microphone button. An acoustic model implemented with HMMs includes transition probabilities to organize time series data. While speech technology had a limited vocabulary in the early days, it is utilized in a wide number of industries today, such as automotive, technology, and healthcare. 
2 describes the most common speech features which are used in current state-of-the-art implementations. During this past decade, several other technology leaders have developed more sophisticated voice recognition software, such as Amazon Alexa. Pricing: Amazon Transcribe is free for 60 minutes per month for a year and costs $0. With more than 700,000 patent applications filed in the US in 2022, there’s a growing need for a game-changing solution. Speaking will also help you finish your first draft faster because it helps you resist the desire to edit as you go. Js component to record in your app. Most people don’t need anything special. Correspondence to Mishaim Malik.

Conclusion

A lot of benefits can come from recognizing those who do good work, so it’s important to prepare yourself ahead of time. Click the mic icon if you don’t see the prompt. This page was last modified on Jul 7, 2023 by MDN contributors. I just wanted to make myself clear here that you mean a lot to our team. Here’s a list of languages supported by speech recognition. You can access this by creating an instance of the Microphone class. Here are a few simple ways you can send those recognition messages. The humanity of individuals working for you should always be remembered, especially during formal events. They are mostly a nuisance. Facial recognition laws are literally all over the map. The goal is to keep making speech recognition more accurate. A section may consist of one or more paragraphs and include graphics, tables, lists and subsections. There are basic formatting voice commands you can also use with Siri. Fired when the speech recognition service has begun listening to incoming audio with intent to recognize grammars associated with the current SpeechRecognition. Learn how to create custom speech models using IBM Watson quickly, without knowing how to code. In addition to specifying a recording duration, the record method can be given a specific starting point using the offset keyword argument. It’s far easier just to click your mouse or swipe your finger. This is more than a regular loss function, because it defines a probability distribution over the output symbols and also models how the symbols follow one another; from this point of view, it plays a role similar to an HMM. To install, simply run pip install wheel followed by pip install. The SpeechRecognition library acts as a wrapper for several popular speech APIs and is thus extremely flexible. Employee appreciation day is overrated. Otherwise, download the source distribution from PyPI, and extract the archive.

Demo

Use your voice to create and edit documents or emails, launch applications, open files, control your mouse, and more. Io provides an API that equips both businesses and individuals with sophisticated speech to text capabilities. That said, the accuracy and basic functionality of Google’s voice to text software is not all that different from what Windows and Apple have. HSBC launched voice biometrics as a security measure on their accounts in 2016. When you say a name, the phone doesn’t do any particularly sophisticated analysis; it simply compares the sound pattern with ones you’ve stored previously and picks the best match. The above examples worked well because the audio file is reasonably clean. You have demonstrated a wide range of skills among yourselves and are able to work together in such an organized manner. To start Windows Speech Recognition, say “Start listening. For higher sentence accuracy. With dictation software, you speak, and the software transcribes your words in real time.

RESEARCHER THOUGHTS

A wide number of industries are utilizing different applications of speech technology today, helping businesses and consumers save time and even lives. Uploaded Dec 6, 2023 source. Installing NVDA will allow for additional functionality such as automatic starting after sign in, the ability to read the Windows sign in and secure screens. However, note we work with our customers on a one by one basis to deliver customized models better suited to your content which will drive better quality over time. So, here is a quick rundown of voice and speech recognition, and the roles they’ll continue to play in technology of the future. DTW is a dynamic programming algorithm that finds the best possible word sequence by calculating the distance between time series: one representing the unknown speech and others representing the known words. Based on the innovative network structure TLC BLSTM, ASR leverages the attention mechanism to effectively model speech signals and improves the system robustness through the teacher student approach, delivering an industry leading recognition accuracy and efficiency in diverse scenarios in general and vertical fields. Wait a moment for the interpreter prompt to display again. The technology behind speech recognition has been in development for over half a century, going through several periods of intense promise — and disappointment. It captures longer dependencies than simple Transformers or than RNNs, achieving better performances on short and long sequences and a faster inference time. By default, NVDA can automatically detect and connect to these displays either via USB or bluetooth. In fact, the first attempts to create speech recognition tools date back to 1952. Where drivers may have been tempted to send a text behind the wheel, they can now connect to their car via Bluetooth and send a text hands free using speech recognition. With Riva, you can quickly access the latest SOTA research models tailored for production purposes. 
Again, this is completely unstyled for better visibility. When evaluating speech recognition software, one must consider the following key features. You can also find all the code in the repo for this project. This is a problem in many hiking areas. As is common, high-resource languages fare better than low-resource languages. This can be done by saying the number or using the keypad. A submit “search” button on one Web page and a “find” button on another Web page may both have a field to enter a term and list topics in the Web site related to the term submitted. The decoder is a conventional recurrent-based one, with one particularity in the training step: the previous ground truth is used instead of the real previous prediction. I am Rajeshwari, a passionate blogger who loves to write about education, health. Along the way, speech recognition captures actionable insights from customer conversations you can use to bolster your organization’s operational and marketing processes.
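The DTW idea described above (comparing an unknown utterance against stored word templates by time-series distance) can be illustrated with a minimal sketch. It assumes each utterance has already been reduced to a short list of numbers; the feature values, the `templates` dictionary and the function names are invented for illustration, and a real recognizer would compare frames of acoustic features instead.

```python
def dtw_distance(a, b):
    """Return the cumulative DTW alignment cost between two 1-D sequences."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a, step in b, or step in both.
            cost[i][j] = local + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]


def recognize(unknown, templates):
    """Pick the template word whose sequence is closest to the unknown one."""
    return min(templates, key=lambda word: dtw_distance(unknown, templates[word]))


# Hypothetical one-dimensional "features" for two known words.
templates = {"yes": [1, 3, 4, 3, 1], "no": [5, 5, 2, 1, 1]}
# The same shape as "yes", but spoken more slowly (some values repeated).
unknown = [1, 1, 3, 4, 4, 3, 1]

print(recognize(unknown, templates))
```

Because DTW is allowed to stretch or compress the time axis, the slower utterance still aligns with its template at zero cost, which is why this approach suited early isolated-word recognizers.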

Sriram

Devices such as Alexa, Google Home and Siri employ speech recognition to transcribe your thoughts into notes. To access your microphone with SpeechRecognizer, you’ll have to install the PyAudio package. For dictation, there are about 26 voice commands. Speech recognition technology provides the platform for us to communicate credibly, but it is up to marketers to make the relationship with their audience mutually beneficial. It’s not realistic for me to use that training data in any way with any compute I have access to. Thanks to natural language understanding (NLU), voice assistants can understand any voice command, regardless of established rules, as long as the user’s intention is clear. Repeat this sequence until the grid centers over your target, then give the command “click”. And UK, French, German, Japanese, Mandarin Chinese Simplified and Chinese Traditional, and Spanish. I’m not aware of any simple way to turn those messages off at this time, besides entirely disabling printing while starting the microphone. There are many different platforms on the market, but the most commonly used for dictating books is the suite of software from Nuance known as Dragon Dictation. One of these, the Google Web Speech API, supports a default API key that is hard-coded into the SpeechRecognition library. Choose ‘allow’ to enable microphone access. We can also use perturbation techniques to augment the training dataset. The response to speech-enabled products has been overwhelming, from Amazon Alexa and Apple Siri to speech-enabled fridges, and these products can play a valuable supporting role in household tech now and in the near future. You’ve seen how to create an AudioFile instance from an audio file and use the record method to capture data from the file.

Fluent.ai’s proprietary “speech-to-intent” technology directly maps a user’s speech to their desired action without the need for speech-to-text transcription or a cloud connection. Our text-independent approach enables us to develop speech recognition systems for any language and accent that run completely offline and work accurately even in noisy environments.

That said, wrangling external dependencies on Windows is very annoying. Also, the next and previous line commands will move by paragraph accordingly. The time-channel separable convolutions in Nvidia QuartzNet also lead to a small number of parameters. However, the ideal format involves using human intelligence to fact check results that the artificial intelligence produces. If this transition occurs, expect ASR models to become even more accurate and affordable, making their use and acceptance more widespread. To start dictating, select a text field and press the Windows logo key + H to open the dictation toolbar. The CNN blocks, which act as the front end, are followed by 12 blocks of factored TDNN (TDNN-F). In terms of language modeling, pipeline systems, such as those based on Kaldi, necessarily depend on a language model. Current text to speech models are typically trained on speech corpora that contain only a single speaker. Like an Alexa for your PC, you can ask it to do math calculations, play a song, report the weather, read an ebook aloud, and set alarms; the possibilities are endless. The actual commands will not execute while in input help mode. While it’s commonly confused with voice recognition, speech recognition focuses on the translation of speech from a verbal format to a text one, whereas voice recognition just seeks to identify an individual user’s voice. Vosk scales from small devices like a Raspberry Pi or an Android smartphone to big clusters. For example, if you find you write 1000 words a day in the time that you have, imagine if you could double that each day. Set the probability of adding noise to 1 and specify the SNR range as dB. Voice to text software is speech recognition technology that turns spoken words into written words. The aims and scope of each journal will outline their peer review policy in detail.
Unlike the KWS experiment, the MLPerf repository mandates that inference be performed with the validation dataset. In the Text to Speech tab, you can control voice settings, including. First, make sure you have all the requirements listed in the “Requirements” section. If you want to read more on HMMs and HMM GMM training, you can read this article. Interim results are results that are not yet final e. Openai required only if you need to use Whisper API speech recognition recognizer instance. Thanks for contributing an answer to Stack Overflow. AI voice recognition enhances the capabilities of customer service conversational AI channels. Last year Microsoft added a second speech recognition engine to Windows 10. This option is only available if reporting of spelling errors is enabled in NVDA’s Document Formatting Settings, found in the NVDA Settings dialog. Implementation of speech recognition technology in the last few decades has unveiled a very problematic issue ingrained in them: racial bias. While speech and voice recognition work differently, the two deeply intertwine to provide many cross functional capabilities to improve our daily lives and present possibilities for the future.
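The noise-augmentation step mentioned above (adding noise with a given probability at an SNR drawn from a range) can be sketched in plain Python. This is only an illustration of the arithmetic, not the API of any particular toolkit: the function names are invented here, and the `(5.0, 15.0)` dB range is a placeholder, since the text does not give the actual range.

```python
import math
import random


def power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def mix_at_snr(signal, noise, snr_db):
    """Add noise to signal, scaled so the result has the requested SNR in dB."""
    # SNR_dB = 10 * log10(P_signal / P_scaled_noise), solved for the noise scale.
    scale = math.sqrt(power(signal) / (power(noise) * 10 ** (snr_db / 10)))
    return [s + scale * n for s, n in zip(signal, noise)]


def augment(signal, noise, snr_range_db, prob=1.0, rng=random):
    """With probability prob, add noise at an SNR drawn uniformly from the range."""
    if rng.random() >= prob:
        return list(signal)  # leave this example clean
    snr_db = rng.uniform(*snr_range_db)
    return mix_at_snr(signal, noise, snr_db)


rng = random.Random(0)
# A 440 Hz tone sampled at 16 kHz stands in for speech; Gaussian noise for the room.
signal = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1600)]
noise = [rng.gauss(0.0, 1.0) for _ in range(1600)]

# Placeholder SNR range; prob=1.0 means noise is always added.
noisy = augment(signal, noise, snr_range_db=(5.0, 15.0), prob=1.0, rng=rng)
```

Setting the probability to 1 means every training example is perturbed, while a lower value keeps a share of clean examples in the mix.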

Conclusion

Namely, DIVIDE extracts discriminative information that represents the similarity of human speech by utilizing statistical models of human speech signals as a priori knowledge in front end processing. Try longer pauses between sentences. As the output suggests, your model should have recognized the audio command as “no”. Microsoft Windows Vista, 7, 8, 10, and 11 include a speech recognition feature in English, French, German, Japanese, Simplified Chinese, Spanish, and Traditional Chinese. If language compatibility isn’t an issue for you, or if you aim to maximize control over your PC, you may be better off using the newer system. The encoder takes input sequences, such as a speech waveform, and extracts useful recognition features. You can find the Vosk project homepage here. Yield in Python: An Ultimate Tutorial on Yield Keyword in Python. To do this, you have two paths to take. For companies who don’t have the time nor resources to achieve this, relying on open source alone may therefore not be the ideal move. Improve Video Quality Online: 3 AI Video Quality Enhancers You Can’t Miss. We applied multiple rounds of this process and performed a final cross validation filtering step based on model accuracy to remove potentially misaligned data. Leveraging state of the art machine learning, you can reach a global audience with high accuracy transcription and translation. Home automation: Smart homes can sync with voice assistants or mobile devices and allow you to issue voice commands to control lighting, heating, security and more. Create a culture that means business™. Alternatively, the first four lines of code can be collapsed to a single line by using the Choices constructor to initialize the instance with an array of string options, as follows. Where these are encountered in browse mode, NVDA will report “embedded object”, “application” or “dialog”, respectively. 
Cai and Wang worked together to filter and sort the emotional data and wrote the programs used to complete the experiments. It’s one of several ways for individuals to communicate with computers without typing. To see this effect, try the following in your interpreter.

Get the contents of the audio file as stream — or get an audio stream directly from a microphone input

When redacting audio content using CaseGuard Studio, there are a wide variety of features that can be utilized to get the job done effectively. It stores the converted text in your browser locally and no data is uploaded anywhere. Chalmers University of Technology. Does the voice recognition logic have anything to do with React. When the AsyncForm is first rendered the MediaUploader is shown. Thank you for being such an amazing role model. It can significantly speed up the writing process, at least after you’ve gotten used to how it works, and is a great alternative for authors who choose not to type, or who can’t type due to complications from something like carpal tunnel. Now you just need to put the finishing touches on your Affirmation. For instance, if you want to recognize Spanish speech, you would use. Rabiner L, Levinson S 1981 Isolated and connected word recognition theory and selected applications. Deepgram supports tailored ASR models optimized with customer specific data, especially important in industries with domain specific jargon, accents, or unique speech patterns. Ultimately, this will lead to better speech understanding capabilities for machines and more natural interactions between humans and machines. SpeechRecognition is a web based API used in Google Chrome, Samsung Internet, and other web browsers to provide speech recognition. Also available via the onnomatch property. As a team, we have faced a few challenges, but we have seen considerable improvements throughout the company. This article, for example, was written using Siri to translate voice to text in Apple’s Notes app. Data/test directory, and then copy the whole. A unique blend of AI and human expertise is what sets Verbit apart from other speech to text software. Perform SOTA Speech2Text on Long Audio Files with/without diarization Using Google Cloud Speech API. However, it is not a fully mature platform, and users may face the occasional bug or issue.

Demo Streaming

USP: Deepgram promises industry-leading transcription speed. RWTH RETURNN left and PaddlePaddle DeepSpeech2 right. Writing an acceptance speech can be challenging, so it helps to brainstorm and prepare in advance. This file has the phrase “the stale smell of old beer lingers” spoken with a loud jackhammer in the background. We have some great news. Finally, if using Windows, ensure that Developer Mode is enabled. You need to make an informed choice because it directly affects your workflow and productivity. Add the following code to your component. Custom voice commands. Let’s add more functionality to our application, as we’re currently just listening to the user’s voice and displaying it. Name and address fields for a user’s account. Even short grunts were transcribed as words like “how” for me. In 1990, the company Dragon launched the first speech recognition product for consumers, Dragon Dictate. Insertion happens when a word that was not said is added; for example, “He is eating chipotle” becomes “He is always eating chipotle”. One can open URLs and programs, type configurable text snippets, control the mouse and keyboard, and simulate shortcuts. The except block is used to catch the RequestError and UnknownValueError exceptions and handle them accordingly. Pricing: Pricing for AssemblyAI starts at $0. Cloud service providers like Google, AWS, and Microsoft offer generic services that you can easily plug and play with. Video Platforms: Real-time and asynchronous video captioning are industry standard. These are crucial performance figures, which one must take into account, along with the transcription quality measured in word error rate, when choosing the architecture to be implemented and deployed in embedded applications. Therefore, the more information there is, the more precise the model is, statistically speaking, and the better it can take the general context into account to assign the best word given the others already defined.
You may then choose to accept the offer, or cancel the transcription of this dictation. Dragon Dictate can assist people who are full of ideas, but have difficulty writing them down.
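The word error rate mentioned above, together with the insertion example (“He is eating chipotle” becoming “He is always eating chipotle”), can be made concrete with a small sketch. It assumes the usual definition of WER as word-level edit distance (substitutions, deletions and insertions) divided by the number of reference words; the function name is chosen here for illustration, and the text is split on whitespace with no normalization.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = fewest edits needed to turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete every reference word
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert every hypothesis word
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = dist[i - 1][j - 1] + (ref[i - 1] != hyp[j - 1])
            deletion = dist[i - 1][j] + 1
            insertion = dist[i][j - 1] + 1
            dist[i][j] = min(substitution, deletion, insertion)
    return dist[len(ref)][len(hyp)] / len(ref)


wer = word_error_rate("He is eating chipotle", "He is always eating chipotle")
print(wer)  # one inserted word over four reference words: 0.25
```

Because insertions count as errors, WER can exceed 1.0 when the transcript contains many more words than were actually spoken.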